Distributional and Spatial-Temporal Robust Representation Learning for Transportation Activity Recognition

DSTRR

Abstract

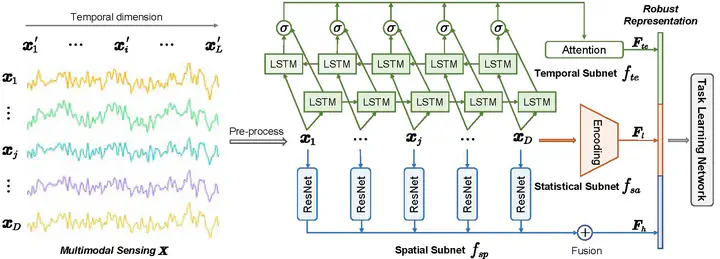

Transportation activity recognition (TAR) provides valuable support for intelligent transportation applications such as urban transportation planning, driving behavior analysis, and traffic prediction. TAR based on movable sensors offers many advantages, and its key challenge is to capture salient features from segmented data that can represent diverse activity patterns. Existing methods based on statistical information are efficient, but they usually rely on domain knowledge to construct high-quality features manually. Conversely, methods based on spatial-temporal relationships achieve good performance but fail to extract statistical features. The features extracted by both kinds of methods have proven crucial for activity classification, yet how to combine them into a more robust representation remains an open question. In this work, we introduce a novel parallel model named Distributional and Spatial-Temporal Robust Representation (DSTRR), which integrates the automatic learning of statistical, spatial, and temporal features into a unified framework. This design yields three optimized subnets and thus a robust representation tailored to TAR. Extensive experiments on three public datasets show that DSTRR achieves state-of-the-art performance compared with existing methods. The results of the ablation study and visualization not only demonstrate the effectiveness of each component of DSTRR but also show that the model remains robust across a wide range of parameter variations.
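To make the idea of a parallel statistical/spatial/temporal representation concrete, the following is a minimal NumPy sketch. It is not the DSTRR implementation, and every name in it (`segment_stream`, `statistical_branch`, `spatial_branch`, `temporal_branch`, `fused_representation`) is hypothetical. It assumes a tri-axial accelerometer stream, a window of 128 samples with 50% overlap, and replaces each learned subnet with a fixed stand-in feature map: per-channel summary statistics for the distributional branch, cross-channel correlations for the spatial branch, and lag-1 autocorrelation for the temporal branch. Fusing the branch outputs by concatenation is also an assumption; the abstract does not specify how the three representations are combined.

```python
import numpy as np

# Minimal sketch of a three-branch parallel representation, NOT the DSTRR code.
# Each branch is a fixed, hypothetical stand-in for a learned subnet.


def segment_stream(stream: np.ndarray, window: int = 128, step: int = 64) -> np.ndarray:
    """Cut a (time, channels) stream into overlapping windows (hypothetical sizes)."""
    starts = range(0, len(stream) - window + 1, step)
    return np.stack([stream[s:s + window] for s in starts])


def statistical_branch(segment: np.ndarray) -> np.ndarray:
    """Distributional stand-in: per-channel mean, std, min and max."""
    return np.concatenate([
        segment.mean(axis=0),
        segment.std(axis=0),
        segment.min(axis=0),
        segment.max(axis=0),
    ])


def spatial_branch(segment: np.ndarray) -> np.ndarray:
    """Spatial stand-in: pairwise correlations between sensor channels."""
    corr = np.corrcoef(segment, rowvar=False)      # (channels, channels)
    rows, cols = np.triu_indices(segment.shape[1], k=1)
    return np.nan_to_num(corr[rows, cols])          # constant channels -> 0


def temporal_branch(segment: np.ndarray, lag: int = 1) -> np.ndarray:
    """Temporal stand-in: per-channel autocorrelation at the given lag."""
    centered = segment - segment.mean(axis=0)
    num = (centered[:-lag] * centered[lag:]).sum(axis=0)
    den = np.maximum((centered ** 2).sum(axis=0), 1e-12)  # avoid division by zero
    return num / den


def fused_representation(segment: np.ndarray) -> np.ndarray:
    """Run the three branches on one segment and concatenate their outputs."""
    return np.concatenate([
        statistical_branch(segment),
        spatial_branch(segment),
        temporal_branch(segment),
    ])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    stream = rng.normal(size=(512, 3))     # synthetic x, y, z accelerometer data
    segments = segment_stream(stream)      # shape (7, 128, 3)
    features = np.stack([fused_representation(s) for s in segments])
    print(segments.shape, features.shape)  # (7, 128, 3) (7, 18)
```

Because each branch processes the same segment independently, any stand-in could be replaced by a learned network without changing the fusion step. In DSTRR the three subnets are optimized rather than fixed as they are here, so this sketch only illustrates the overall shape of the approach.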